Research

My work has mainly focused on learning representations that make reinforcement learning significantly faster. I have also collaborated on other topics in RL and imitation learning.

Addressing Sample Complexity in Visual Tasks Using HER and Hallucinatory GANs

(NeurIPS '19) [paper] [code]

Himanshu Sahni, Toby Buckley, Pieter Abbeel, Ilya Kuzovkin

ABSTRACT: Reinforcement Learning (RL) algorithms typically require millions of environment interactions to learn successful policies in sparse reward settings. Hindsight Experience Replay (HER) was introduced as a technique to increase sample efficiency by reimagining unsuccessful trajectories as successful ones by altering the originally intended goals. However, it cannot be directly applied to visual environments where goal states are often characterized by the presence of distinct visual features. In this work, we show how visual trajectories can be hallucinated to appear successful by altering agent observations using a generative model trained on relatively few snapshots of the goal. We then use this model in combination with HER to train RL agents in visual settings. We validate our approach on 3D navigation tasks and a simulated robotics application and show marked improvement over baselines derived from previous work.
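The goal relabeling that HER performs can be illustrated with a minimal sketch. It is not the paper's implementation: it covers only the standard relabeling step on low-dimensional vector states, not the hallucination of visual goals with a generative model. The function names, the dictionary layout of a transition, and the sparse 0 / -1 reward are assumptions chosen for illustration.

```python
import numpy as np


def sparse_reward(achieved, goal):
    """Hypothetical sparse reward: 0 when the goal is reached, -1 otherwise."""
    return 0.0 if np.array_equal(achieved, goal) else -1.0


def relabel_with_final_goal(trajectory, reward_fn):
    """Return a relabeled copy of `trajectory` (the input is left unchanged).

    The intended goal of every transition is replaced by the state the agent
    actually reached at the end of the episode, and rewards are recomputed
    against that new goal, so a failed episode becomes a successful one.
    """
    new_goal = trajectory[-1]["next_state"]
    relabeled = []
    for step in trajectory:
        relabeled.append({
            "state": step["state"],
            "action": step["action"],
            "next_state": step["next_state"],
            "goal": new_goal,
            "reward": reward_fn(step["next_state"], new_goal),
        })
    return relabeled


# A failed episode on a 1-D line: the agent wanted to reach 5 but stopped at 2.
trajectory = [
    {"state": np.array([0]), "action": 1, "next_state": np.array([1]),
     "goal": np.array([5]), "reward": -1.0},
    {"state": np.array([1]), "action": 1, "next_state": np.array([2]),
     "goal": np.array([5]), "reward": -1.0},
]

relabeled = relabel_with_final_goal(trajectory, sparse_reward)
```

After relabeling, the final transition carries a reward of 0 because the substituted goal is the state that was actually reached. In a visual setting the goal is not a vector that can simply be swapped, which is the gap the abstract describes.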

Attention Driven Dynamic Memory Maps

(Bridging AI and Cognitive Science Workshop, ICLR '20) [paper]

Himanshu Sahni, Shray Bansal, Charles Isbell

ABSTRACT: In order to act in complex, natural environments, biological intelligence has developed attention to collect limited informative observations, a short term memory to store them, and the ability to build live mental models of its surroundings. We mirror this concept in artificial agents by learning to 1) guide an attention mechanism to the most informative parts of the state, 2) efficiently represent state from a sequence of partial observations, and 3) update unobserved parts of the state through learned world models. Key to this approach is a novel short-term memory architecture, the Dynamic Memory Map (DMM), and an adversarially trained attention controller. We demonstrate that our approach is effective in predicting the full state from a sequence of partial observations. We also show that DMMs can be used for control, outperforming baselines in two partially observable reinforcement learning tasks.

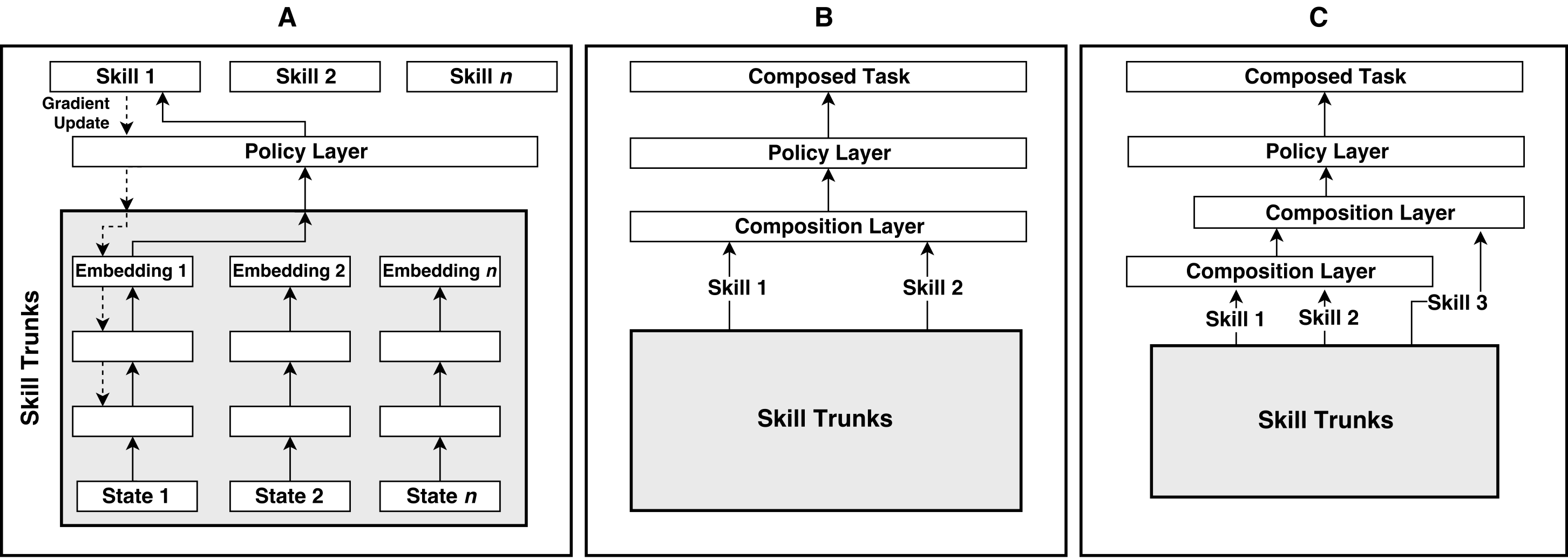

Learning to Compose Skills

(Deep Reinforcement Learning Symposium, NeurIPS '17) [paper] [code]

Himanshu Sahni, Saurabh Kumar, Farhan Tejani, Charles Isbell

ABSTRACT: We present a differentiable framework capable of learning a wide variety of compositions of simple policies that we call skills. By recursively composing skills with themselves, we can create hierarchies that display complex behavior. Skill networks are trained to generate skill-state embeddings that are provided as inputs to a trainable composition function, which in turn outputs a policy for the overall task. Our experiments on an environment consisting of multiple collect and evade tasks show that this architecture is able to quickly build complex skills from simpler ones. Furthermore, the learned composition function displays some transfer to unseen combinations of skills, allowing for zero-shot generalizations.
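The data flow described in the abstract (skill networks produce embeddings, and a composition function maps those embeddings to a policy) can be sketched as a single forward pass. This is an illustration under assumptions, not the architecture from the paper: the layer shapes, the `tanh` skill networks, the concatenation of embeddings, and the linear-softmax composition function are all hypothetical, and no training is shown.

```python
import numpy as np

# Hypothetical sizes, chosen only for illustration.
STATE_DIM, EMBED_DIM, NUM_ACTIONS = 4, 8, 3

rng = np.random.default_rng(0)


def make_skill(rng):
    """Build a toy 'skill network': one random linear layer followed by tanh."""
    weights = rng.normal(scale=0.1, size=(STATE_DIM, EMBED_DIM))
    return lambda state: np.tanh(state @ weights)


def softmax(logits):
    """Numerically stable softmax over a 1-D array."""
    shifted = logits - logits.max()
    exps = np.exp(shifted)
    return exps / exps.sum()


skill_a = make_skill(rng)
skill_b = make_skill(rng)

# Toy composition function: a linear map from the concatenated
# skill-state embeddings to action logits.
composition_weights = rng.normal(scale=0.1, size=(2 * EMBED_DIM, NUM_ACTIONS))


def composed_policy(state):
    """Return action probabilities for the composed task in `state`."""
    embedding = np.concatenate([skill_a(state), skill_b(state)])
    return softmax(embedding @ composition_weights)


state = rng.normal(size=STATE_DIM)
probs = composed_policy(state)
```

Every operation here is differentiable, so in principle the composition weights could be trained end to end with a gradient-based method.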

Publications

Himanshu Sahni and Charles Isbell. "Hard Attention Control By Mutual Information Maximization". ArXiv '21.

Himanshu Sahni, Shray Bansal, and Charles Isbell. "Attention Driven Dynamic Memory Maps". Bridging AI and Cognitive Science (Workshop ICLR '20).

Ashley D Edwards, Himanshu Sahni, Rosanne Liu, Jane Hung, Ankit Jain, Rui Wang, Adrien Ecoffet, Thomas Miconi, Charles Isbell, and Jason Yosinski. "Estimating Q(s, s') with Deep Deterministic Dynamics Gradients". International Conference on Machine Learning (ICML '20).

Himanshu Sahni, Toby Buckley, Pieter Abbeel, and Ilya Kuzovkin. "Addressing Sample Complexity in Visual Tasks Using HER and Hallucinatory GANs". Neural Information Processing Systems (NeurIPS '19).

Ashley D Edwards, Himanshu Sahni, Yannick Schroecker, and Charles L Isbell. "Imitating Latent Policies from Observation". International Conference on Machine Learning (ICML '19).

Himanshu Sahni, Saurabh Kumar, Farhan Tejani, and Charles Isbell. "Learning to Compose Skills". Deep Reinforcement Learning Symposium (Workshop NeurIPS '17).

Himanshu Sahni, Saurabh Kumar, Farhan Tejani, Yannick Schroecker, and Charles Isbell. "State Space Decomposition and Subgoal Creation for Transfer in Deep Reinforcement Learning". Multi-disciplinary Conference on Reinforcement Learning and Decision Making (RLDM '17).

Himanshu Sahni, Brent Harrison, Kaushik Subramanian, Thomas Cederborg, Charles Isbell, and Andrea Thomaz. "Policy Shaping in Domains with Multiple Optimal Policies". Autonomous Agents & Multiagent Systems (AAMAS '16).

Zahoor Zafrulla, Himanshu Sahni, Abdelkareem Bedri, and Pavleen Thukral. "Hand Detection in American Sign Language Depth Data Using Domain-Driven Random Forest Regression". Face & Gesture (FG '15).

Himanshu Sahni, Abdelkareem Bedri, Gabriel Reyes, Pavleen Thukral, Zehua Guo, Thad Starner, and Maysam Ghovanloo. "The Tongue and Ear Interface: A Wearable System for Silent Speech Recognition". International Symposium on Wearable Computers (ISWC '14) (Best paper nominee).

B. Vashishta, M. Garg, R. Chaudhary, H. Sahni, R. Khanna, and A. S. Rathore. "Use of Computational Fluid Dynamics for Development and Scale-Up of a Helical Coil Heat Exchanger for Dissolution of a Thermally Labile API". Organic Process Research & Development (OPRD '13).